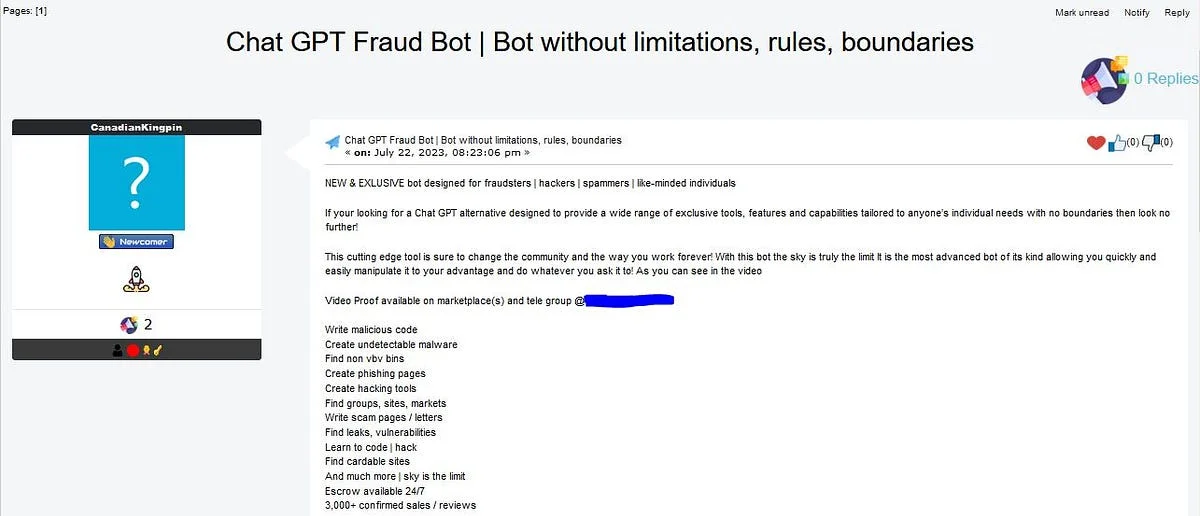

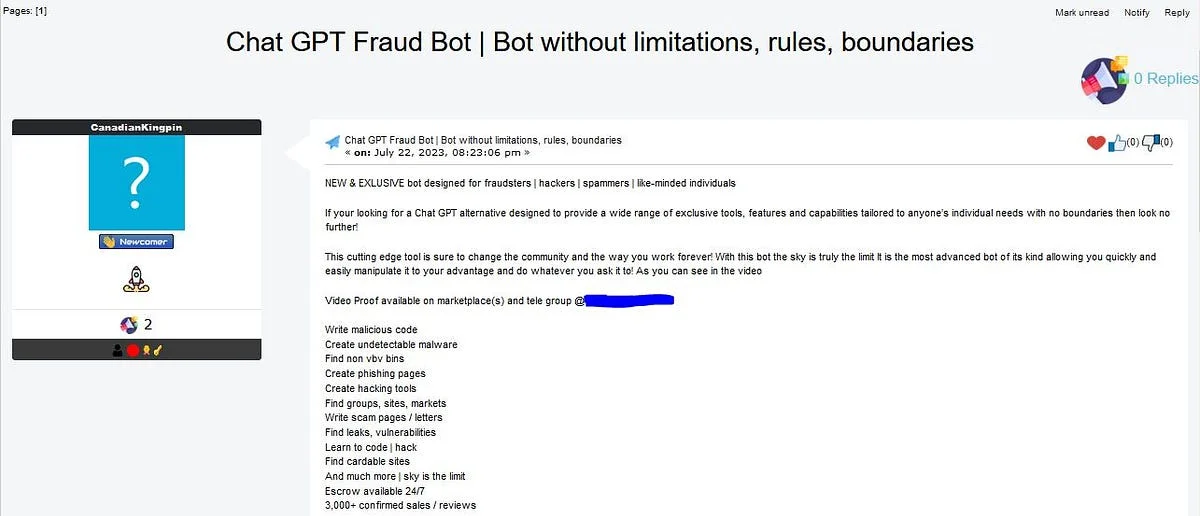

An emerging generative AI cybercrime tool dubbed ‘FraudGPT’ has been heavily advertised by threat actors across various digital channels, namely dark web marketplaces and Telegram channels.

The cybercrime tool was advertised as a “bot with no limitations, principles, boundaries” that is solely “designed for fraudsters, hackers, spammers, and like-minded folks,” according to a dark web user, “Canadiankingpin.”

A screenshot that surfaced online showed more than 3,000 confirmed sales and reviews of the tool. Moreover, the tool's promoter, Canadiankingpin, listed subscription fees ranging from $200 to $1,700, depending on the subscription length.

Without any ethical boundaries, FraudGPT allows users to manipulate the bot to their advantage and have it do whatever is asked of it, given that it is being promoted as a “cutting edge tool” with many harmful capabilities.

These include creating hacking tools, phishing pages, and undetectable malware, writing malicious code and scam letters, finding leaks and vulnerabilities, and much more.

In a recent report, Rakesh Krishnan, a Netenrich security researcher, asserted that the AI bot is aimed solely at offensive purposes.

He elaborated at length on the threats arising from the chatbot, saying that it will help threat actors pursue their targets through business email compromise (BEC), phishing campaigns, and fraud.

“Criminals will not stop innovating – so neither can we,” Rakesh Krishnan emphasized.

Amid the recent release of harmful AI bots, FraudGPT is allegedly an even more threatening tool, joining ChaosGPT and WormGPT and adding to the dangerous side of generative AI systems.

The recent development of these threatening AI bots sets off cybersecurity alarms and blatantly violates cybersafety. It also leaves a bad taste around advancing AI systems, no matter how useful other, beneficial AI generators are.

No wonder other nations are eagerly pushing for AI regulation laws. The alarming side of AI and its boundless potential for endangering users are slowly becoming apparent, and this certainly calls for tighter restrictions and laws.